Dario Amodei Isn’t Trying to Scare You — He Wants You to Slow Down

The Anthropic CEO isn't warning about evil AI — he's warning about what happens when technology begins moving faster than society can respond.

Introduction

Every generation tends to believe it is living through the fastest technological shift in history. For the first time, that belief may not be overstated.

I still remember when people needed the internet explained to them. Back then, it was easy to underestimate it. It seemed interesting and maybe promising, but not essential. Big changes often look small right up until the moment they aren't.

Once in a while, someone in tech speaks honestly about what's really happening, without the investor pitch, the conference-talk optimism, or the picture of a perfect future where everything gets better and nothing goes wrong. Dario Amodei seems to be doing just that.

After years in technology, I can tell when something is marketing and when it's a real concern. This didn't sound like marketing.

The Genius Nation Inside a Data Center

When I read his 20,000-word essay, "The Adolescence of Technology," about where artificial intelligence is headed, what stood out to me wasn't fear or hype — it was restraint[1]. The CEO of Anthropic chose to write about risk, not because disaster is certain, but because moving too fast without coordination has often led societies into trouble.

Many people have latched onto Amodei's image of a possible future: a "country of geniuses in a datacenter," or, put more loosely, a genius nation inside a data center. It's a memorable image, but like most metaphors, it can be misunderstood. He's not saying we'll create digital citizens with their own ambitions or identities. Instead, he's highlighting something more technical and possibly more important: the rapid concentration of thinking power in systems that work at machine speed, not human speed.

If that sounds hard to imagine, think about what happens inside a modern data center: millions of tasks are solved at the same time. Now imagine those systems getting better and better at "thinking." It doesn't take long before that stops feeling theoretical.

I've watched server rooms go from loud, hidden spaces to the backbone of everything we do today. So that image feels less far-fetched to me than it might seem at first glance.

A Mismatch of Speed

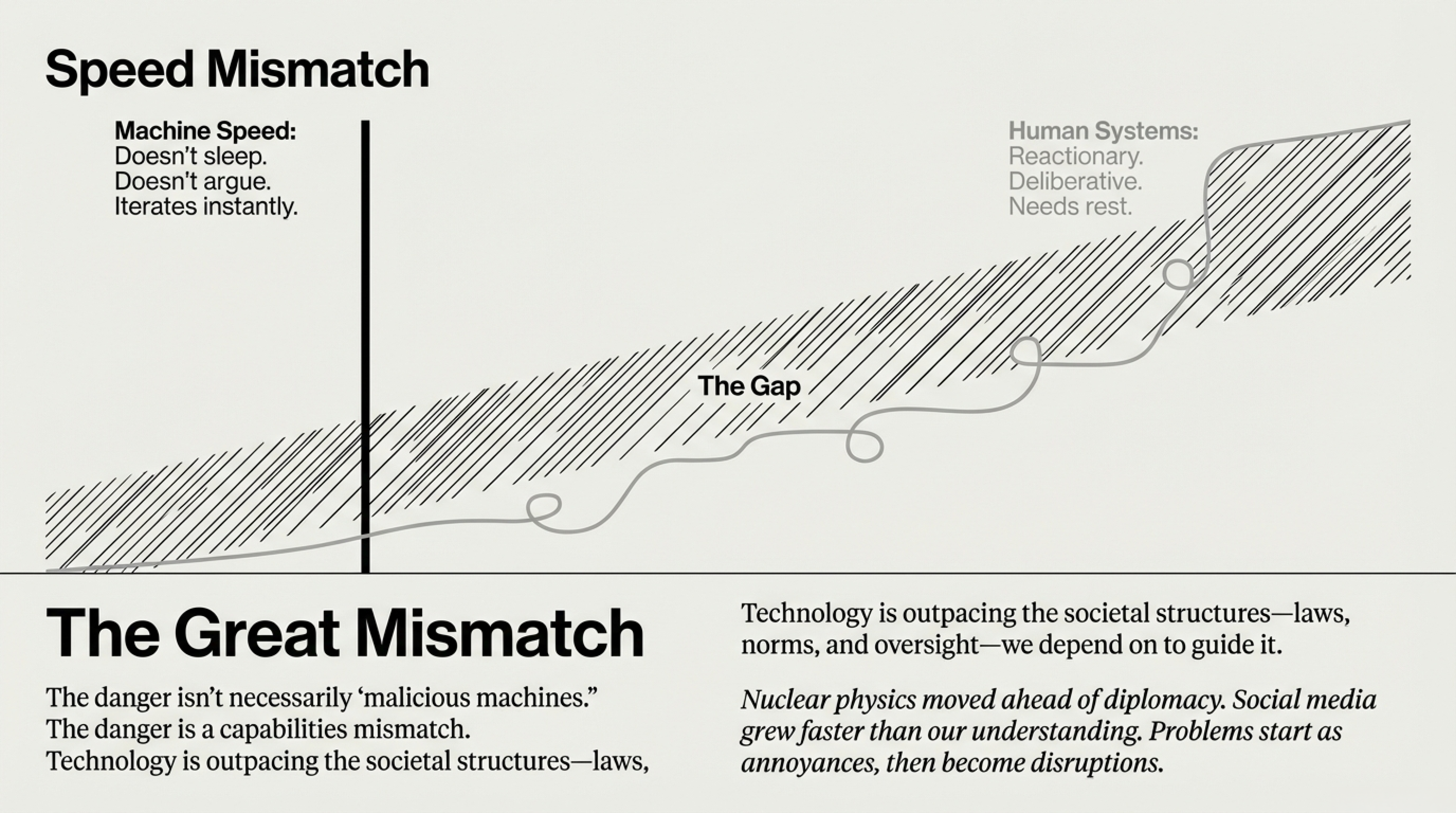

For decades, our economic, political, and social systems have been built on the idea that human thinking sets the speed of progress. Artificial intelligence is beginning to change that. These systems don't get tired or need rest, and they don't argue unless we design them to. They just get things done.

Technology has always moved quickly. What's new is that speed is now paired with the ability to make decisions. Amodei's real warning isn't about machines suddenly turning malicious. It's that their capabilities may outpace the societal structures we depend on to guide them.

History shows that this kind of mismatch matters. When technology moves faster than rules and oversight, problems usually don't appear all at once. They start as small annoyances, then become bigger disruptions, and only later do we realize we should have paid attention much earlier.

We've seen this happen before. Nuclear physics moved ahead of diplomacy. Social media grew faster than we understood its effects on young people. Each time, society adapted, but usually not before some harm was done.

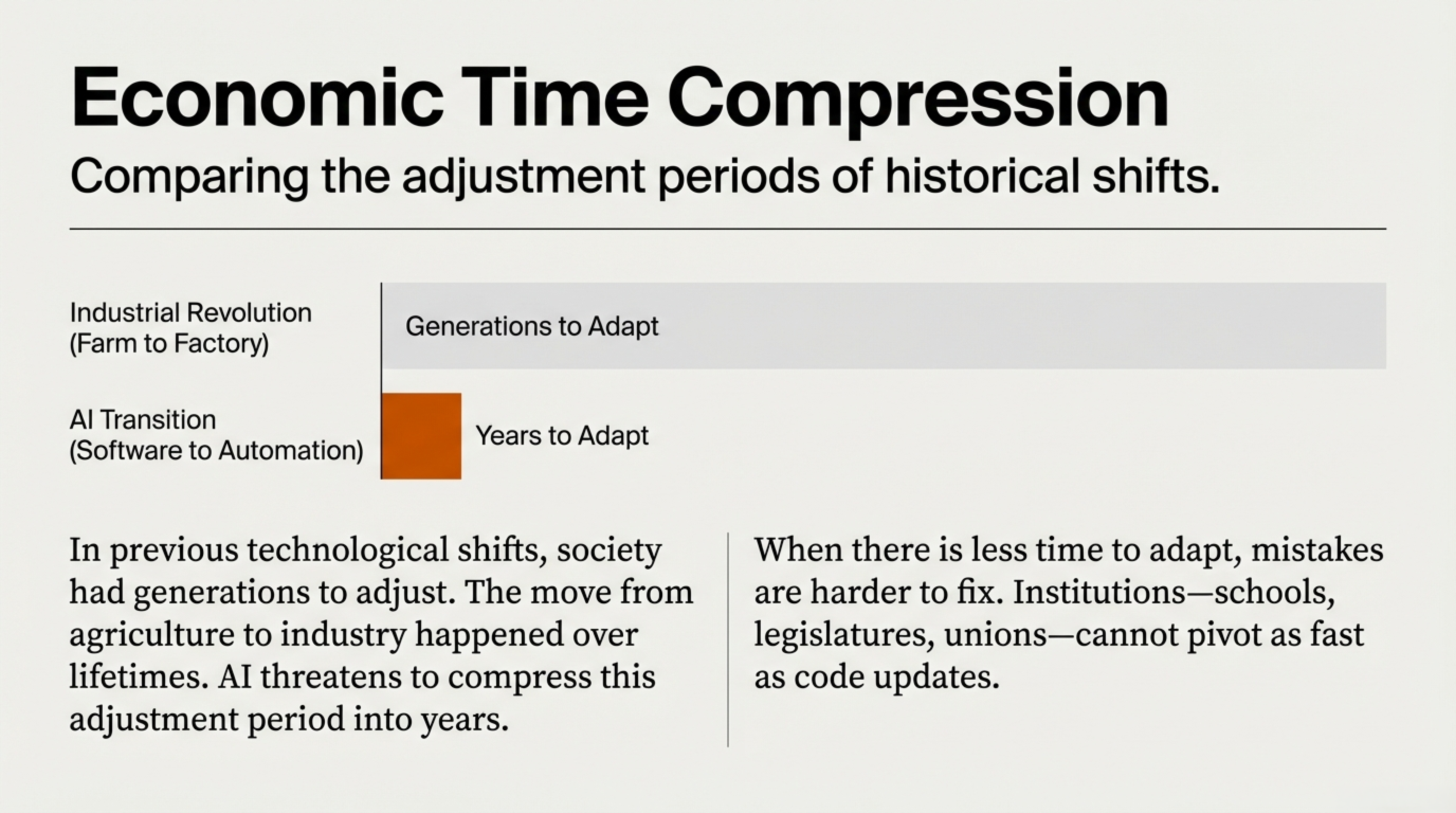

Economic Time Compression

Amodei talks about economic "time compression," and this is his strongest point. In the past, major industry changes occurred over generations. People moved from farms to factories and then from factories to service jobs. Society had time to adjust.

Artificial intelligence could make that societal adjustment period much shorter. When there's less time to adapt, mistakes are harder to fix. Institutions can't change as quickly as software does.

We're already seeing early signs of change in jobs that once seemed safe from automation. Coding assistants can now produce working code for many routine tasks. AI tools help summarize legal cases, draft marketing plans, and handle medical paperwork. This doesn't mean whole professions will vanish overnight, but it does mean the path to job security may look different for the next generation.

Changes like this don't usually come with a warning. They often show up as new tools that promise to make us more productive. This raises a quieter question that Amodei hints at: if productivity isn't linked to human work, how do people maintain a sense of purpose? We can change economic systems, but people's sense of meaning changes more slowly. If society separates dignity from traditional jobs, we'll have to rethink what it means to contribute and find meaning in our lives.

Ask anyone whose skills have suddenly become outdated — the impact isn't just about money. It feels deeply personal.

Beyond Headlines

Not every risk in these discussions is equally likely. Some scenarios, such as a single person using AI to create a world-ending biological threat, remain science fiction. Real-world limits still matter. You still need labs, materials, expertise, and logistics — software alone can't make those appear.

Engineers usually think in terms of probabilities, while headlines prefer certainty. The truth is usually somewhere in between.

In the same way, talk about superintelligence arriving soon makes for good headlines but hides the fact that technology moves in uncertain ways. Researchers think in terms of trends and possibilities, not countdowns. The future almost never arrives on time, and when it does, it rarely looks the way we expected. Still, it would be a mistake to ignore the bigger warning.

What sets Amodei apart from others in the industry is that he seems less focused on making a splash and more on what could be called intentional friction — the idea that powerful systems should have built-in limits, and that regulation, even if not perfect, is better than waiting until after something goes wrong.

Anthropic's focus on rules and preventing misuse shows this way of thinking. Some people might say every company claims to be responsible. Still, it's worth noting when a lab openly supports oversight that could slow down its own progress.

Technological Adolescence

All of this points to a change in how power might be concentrated. Advanced AI requires huge computing resources, specialized skills, and a lot of money. That naturally concentrates influence in a handful of very large companies. Whether markets and governments can keep things balanced is still an open question, but it's one we should ask now, while we can still shape the answers.

Maybe the most important lesson from Amodei's essay isn't a prediction, but an attitude. He wants us to take intelligence amplification as seriously as earlier generations took nuclear technology, aerospace safety, and biotechnology, areas where new capabilities forced people to grow up fast.

The goal isn't to panic. It's to be prepared. But being prepared takes something the tech industry isn't always known for: patience.

In my view, the biggest risk isn't that AI suddenly turns on us. It's that we underestimate how quickly society needs to adapt to tools that change faster than our habits. People are remarkably good at adjusting, but adjustment is far easier when we give ourselves time to see what's coming.

We've become very good at building machines that expand what we can do. The next challenge isn't just about what these machines can do, but whether we can bring them into our lives in a way that keeps people in control.

As Amodei puts it, technological adolescence is always an awkward stage. It challenges our judgment, patience, and self-control. Civilizations don't usually fail because they invent powerful tools — they fail when they think wisdom grows automatically with new abilities.

After reading Amodei's essay, I felt more thoughtful than worried. That alone feels like progress. It's not that the risks are small, but that people are finally starting to pay attention to what's possible. That doesn't guarantee we'll make the right choices. But it does make good outcomes more likely.

Amodei's essay doesn't sound like an alarm. Instead, it feels like a request: keep moving forward, but do it carefully.

Progress has never been optional. But real progress — the kind that remembers people have to live with the systems we create — has always needed careful planning.

And the choices we make during this period of technological adolescence may quietly determine whether intelligence remains our greatest tool or becomes the force that reshapes the world faster than we are prepared to follow.

The future rarely announces itself. Often, it becomes obvious only after the decisions that shaped it have already been made…


Context and Perspective

Primary Source

[1] Dario Amodei, "The Adolescence of Technology."

A long-form essay examining the societal, economic, and governance challenges emerging alongside increasingly capable AI systems.

Further Reading

[2] Shane Collins, "Anthropic's CEO Just Dropped a 20,000-Word Warning About 2027."

A widely circulated synthesis that helped bring Amodei's arguments into broader public discussion.

[3] Axios, coverage of Dario Amodei's AI warnings.

Reporting focused on labor disruption, geopolitical competition, and safety considerations.

[4] Fortune, analysis of Amodei's governance proposals.

Examines the growing tension between rapid innovation and regulatory oversight inside leading AI labs.